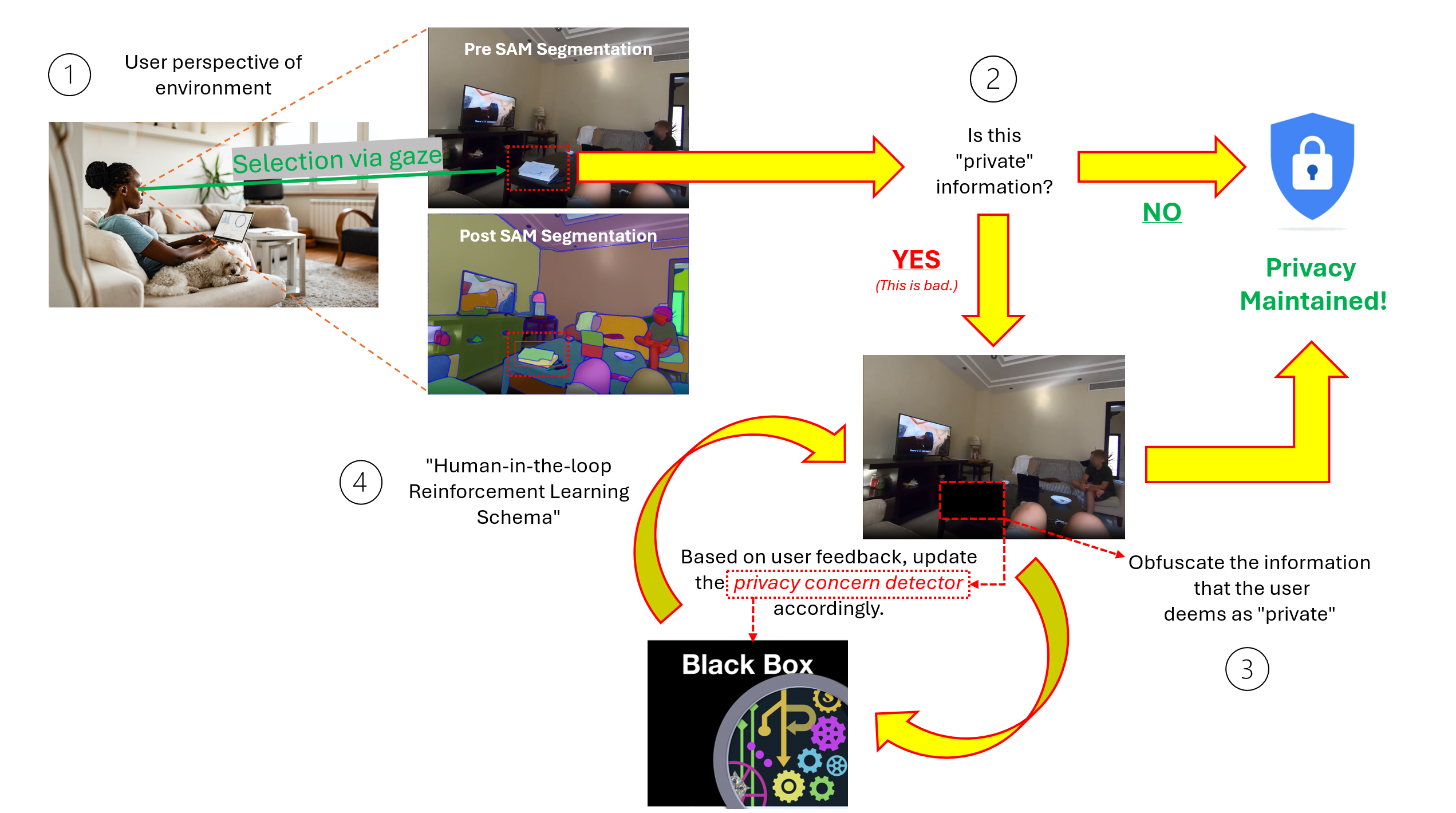

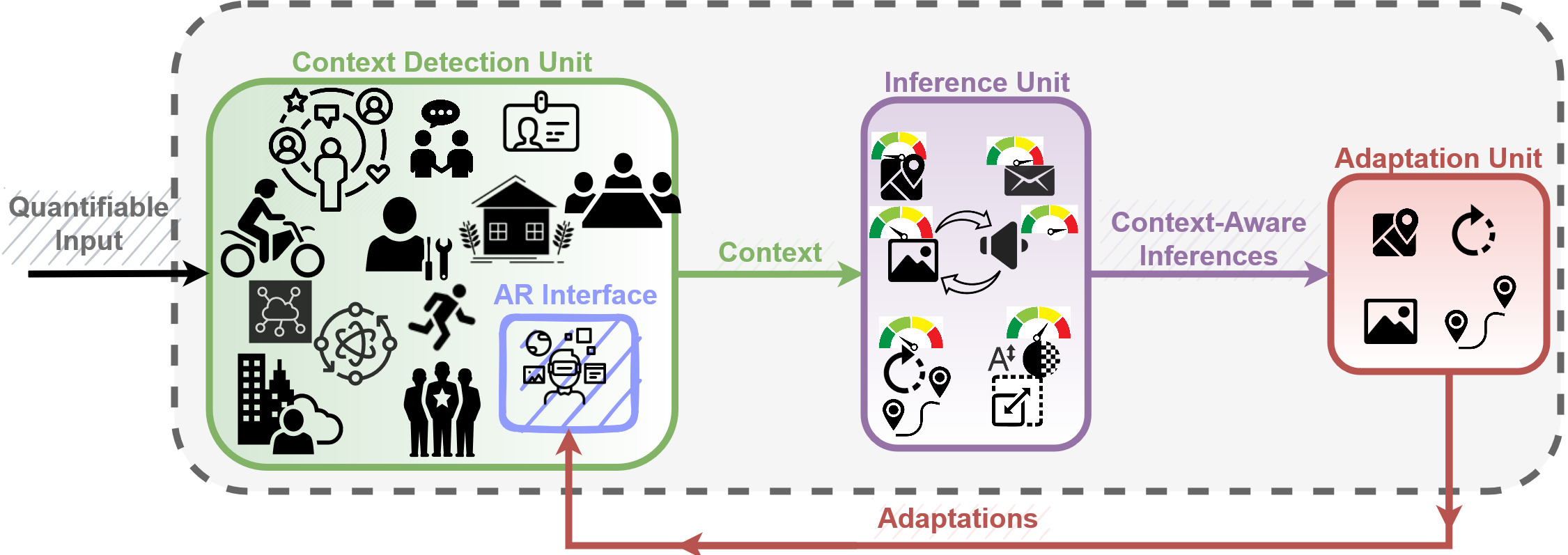

Fig 1: An overview of our approach to creating an interactive, privacy-aware AI assistant for everyday AR.

In this work, we present a practical design framework for a privacy-aware virtual assistant for everyday AR, focusing on educating users who lack technical and/or privacy literacy. Our approach uses a human-in-the-loop process to learn privacy context, provides transparency into the state of the system's privacy detectors, and gives users control and the ability to provide feedback to the system. The...

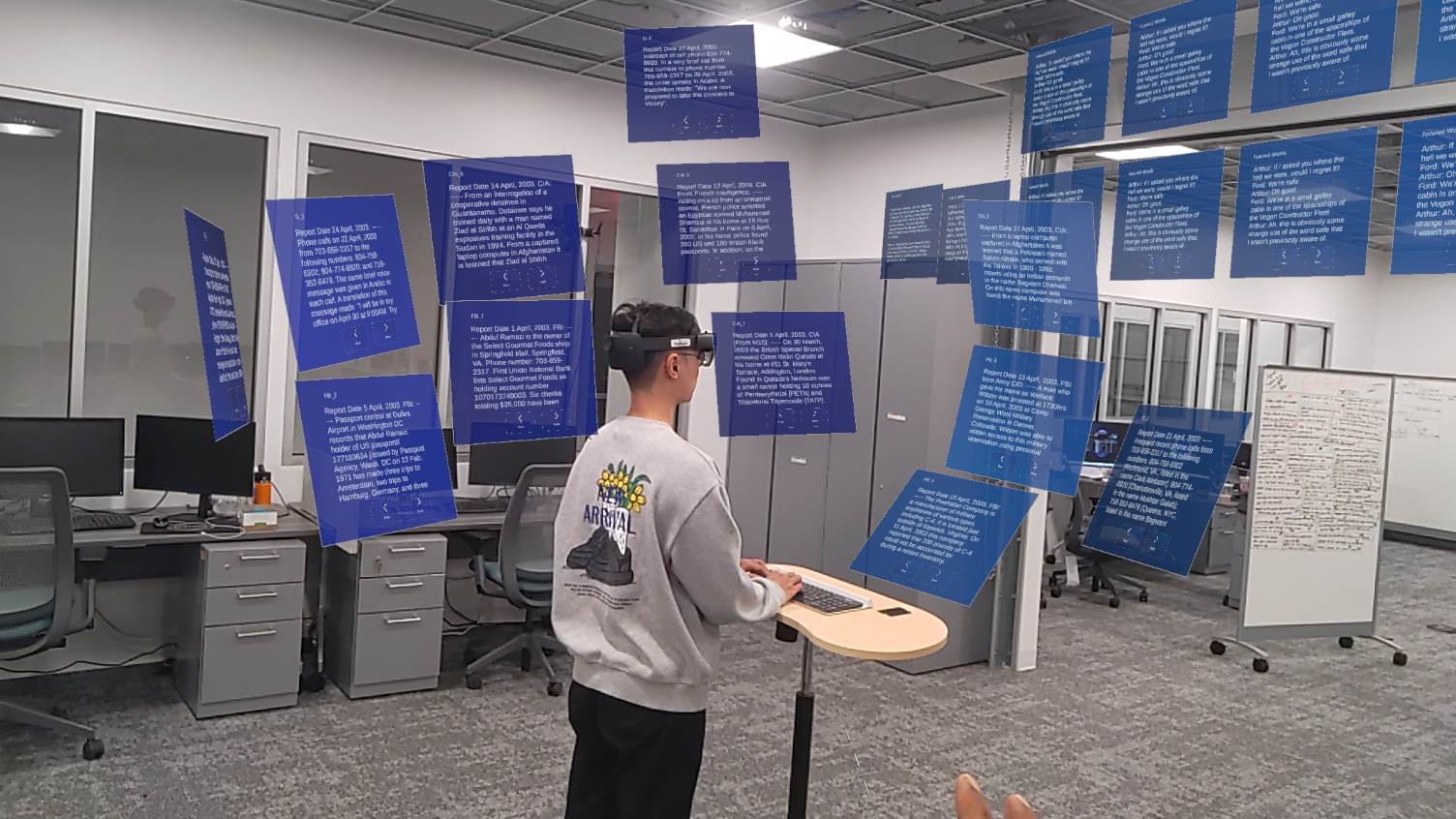

The 3D Interaction (3DI) Group performs research on 3D user interfaces (3D UIs) and 3D interaction techniques for a wide range of tasks and applications. Interaction in three dimensions is crucial to highly interactive virtual reality (VR) and augmented reality (AR) applications in education, training, gaming, visualization, and design. We also conduct empirical studies to understand the effects of immersion in VR and AR, the impact of natural and magic 3D interaction techniques, and usability and user experience in 3D UIs.
